Nylah Anderson

A 10-year-old girl choked herself to death late last year to take part in TikTok’s viral “Blackout Challenge,” her mother alleges in a heartbreaking federal lawsuit.

Though only 10 years old, Nylah Anderson spoke three languages, and her mother Tawainna Anderson blames TikTok’s algorithm for cutting her young life tragically short. TikTok put the challenge on the girl’s “For You Page,” according to the lawsuit.

The mother sued TikTok and its owner ByteDance in the Eastern District of Pennsylvania on Thursday.

“This disturbing ‘challenge,’ which people seem to learn about from sources other than TikTok, long predates our platform and has never been a TikTok trend,” a TikTok spokesperson said when the story first broke in December. “We remain vigilant in our commitment to user safety and would immediately remove related content if found. Our deepest sympathies go out to the family for their tragic loss.”

TikTok’s community guidelines bar dangerous acts or challenges, and the popular app—downloaded more than 130 million times in the United States—is billed for people over the age of 13, though it has a limited app for younger users.

“Programming Children for the Sake of Corporate Profits”

Tawainna said that on Dec. 7 she found her daughter unconscious and hanging by her neck from a purse strap in her bedroom closet and rushed her to the emergency room. Some five days later, Nylah Anderson was dead, and she was not the only child killed by a dangerous, viral social media phenomenon, according to the lawsuit.

“The TikTok Defendants’ algorithm determined that the deadly Blackout Challenge was well-tailored and likely to be of interest to 10-year-old Nylah Anderson, and she died as a result,” the 46-page complaint alleges. “The TikTok Defendants’ app and algorithm are intentionally designed to maximize user engagement and dependence and powerfully encourage children to engage in a repetitive and dopamine-driven feedback loop by watching, sharing, and attempting viral challenges and other videos. TikTok is programming children for the sake of corporate profits and promoting addiction.”

TikTok [Photo illustration by OLIVIER DOULIERY/AFP via Getty Images]

The lawsuit lists other fatalities that reportedly resulted from the “Blackout Challenge,” including another 10-year-old girl in Italy on Jan. 21, 2021; 12-year-old Joshua Haileyesus on March 22, 2021; a 14-year-old boy in Australia on June 14, 2021; and a 12-year-old boy from Oklahoma in July 2021. All of these children allegedly learned about the challenge on their “For You Page.”

That string of young deaths should have pushed TikTok to intervene, the mother says.

“The TikTok Defendants knew or should have known that failing to take immediate and significant action to extinguish the spread of the deadly Blackout Challenge would result in more injuries and deaths, especially among children, as a result of their users attempting the viral challenge,” the lawsuit states.

The mother said TikTok’s algorithm presented multiple “Blackout Challenges” to her daughter, including one that prompted her to wrap plastic wrap around her neck and hold her breath. But she says that the one that killed the 10-year-old came days later.

“The particular Blackout Challenge video that the TikTok Defendants’ algorithm showed Nylah prompted Nylah to hang a purse from a hanger in her closet and position her head between the bag and shoulder strap and then hang herself until blacking out,” the lawsuit states.

“Dangerously Defective Social Media Products”

According to the complaint, the daughter reenacted that challenge in her mother’s bedroom closet while the mother was downstairs.

“Tragically, after hanging herself with the purse as the video the TikTok Defendants put on her FYP showed, Nylah was unable to free herself,” the lawsuit states. “Nylah endured hellacious suffering as she struggled and fought for breath and slowly asphyxiated until near the point of death.”

The mother says she found her daughter there and administered “several rounds of emergency CPR” in a “futile” effort to resuscitate her until emergency responders arrived. Three days of medical care at Nemours DuPont Hospital in Delaware could not save her from her injuries.

Social media companies typically avoid civil liability for dangerous messages on their platforms via Section 230 of the Communications Decency Act, which immunizes internet platforms from liability for what third parties post. This lawsuit claims to vault that hurdle by targeting the broader structure that brought the challenge to the girl’s feed.

“Plaintiff does not seek to hold the TikTok Defendants liable as the speaker or publisher of third-party content and instead intends to hold the TikTok Defendants responsible for their own independent conduct as the designers, programmers, manufacturers, sellers, and/or distributors of their dangerously defective social media products and for their own independent acts of negligence as further described herein,” the complaint states. “Thus, Plaintiff’s claims fall outside of any potential protections afforded by Section 230(c) of the Communications Decency Act.”

Often described as the backbone of free speech on the internet, Section 230 has come under attack from both poles of the political spectrum. Former President Donald Trump and other politicians on the political right have blamed the statute, often erroneously, for supposedly enabling censorship by shielding social media companies from litigation over their content moderation. On the political left, House Speaker Nancy Pelosi and others have criticized the statute for shielding websites that host misinformation, harassment, and abuse.

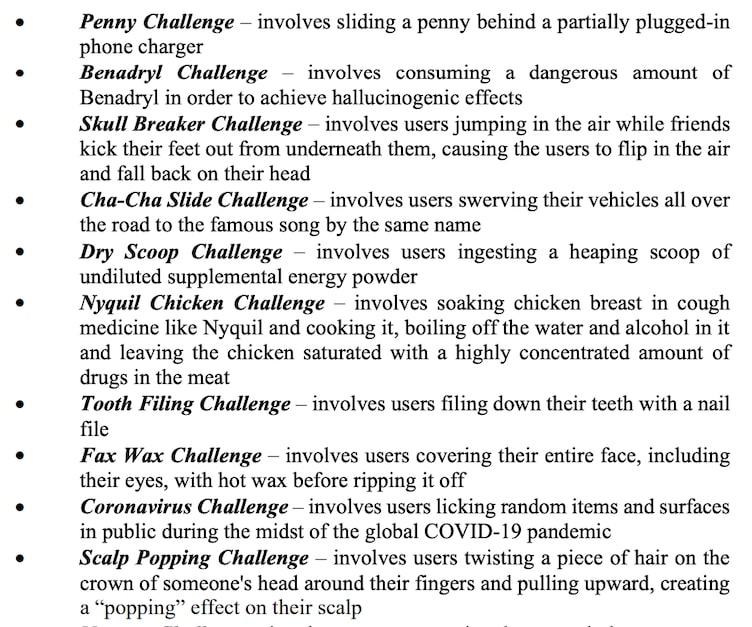

Loosening Section 230’s protections could make social media companies liable for a host of alleged damages. Late last year, the U.S. Surgeon General’s office released an advisory finding spikes in anxiety, depression and suicides coinciding with a dramatic uptick in social media usage among young people. The lawsuit provides a bullet-pointed list of dangerous activities that, like the “Blackout Challenge,” went viral on TikTok.

Only some of the TikTok challenges listed in the lawsuit.

The mother seeks punitive damages for six causes of action, including strict products liability, wrongful death, negligence and violations of state law.

(Photos via lawsuit)